by Sarah Hampton

In my last post, I discussed the ways AI can enhance formative assessment. In this post, let’s take a look at the AI example I’m most excited about and how it’s already benefited 11,000 teachers!

ASSISTments seems both practical and forward-thinking, a rare combination. It can be frustrating to get excited about new technologies that are still in development and not yet ready for the classroom. But, unlike many cutting-edge projects I read about, ASSISTments is ready to implement now.

In their own words, “ASSISTments is more than an assessment tool. It improves the learning journey, translating student assessment to skill building and mastery, guiding students with immediate feedback, and giving teachers the time and data insights to drive every step of their lesson in a meaningful way.”

ASSISTments works through a four-step process to help you get started:

- Create assignments.

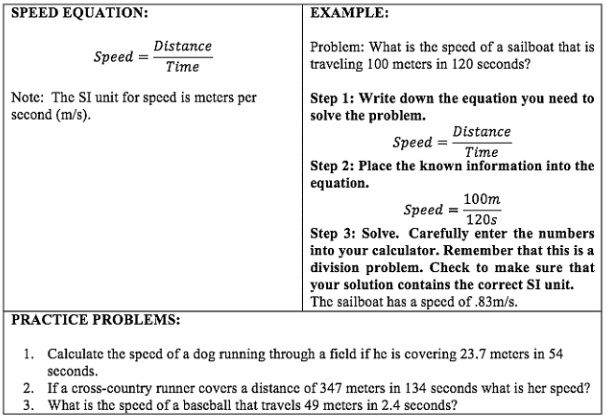

Teachers select questions from existing or custom question banks. I was really impressed with the number and variety of sets already on the site. There are question sets from select open educational resources, textbook curricula, and released state tests ready to be assigned, as well as pre-made general skill-building and problem-solving sets. Note that everything students see is assigned by you, the teacher.

- Assist students through immediate feedback.

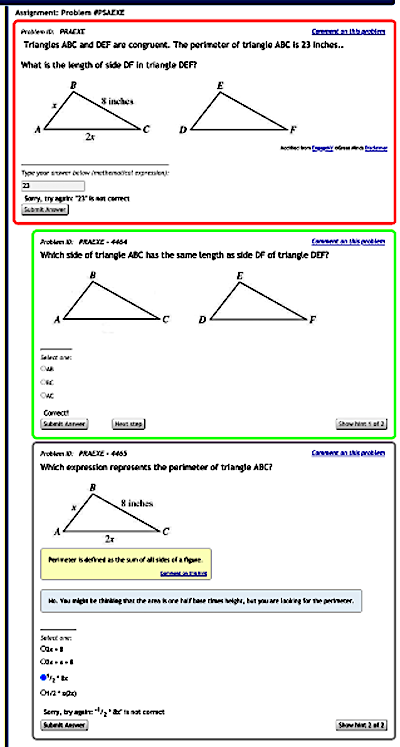

As students complete their assigned problems, they may receive hints and explanations to help them understand. Check out these screenshots of the platform. (See more in The ASSISTments Ecosystem: Building a Platform that Brings Scientists and Teachers Together for Minimally Invasive Research on Human Learning and Teaching.)

- Assess class performance.

Data is also available to the teacher. Check out how easy ASSISTments makes it for teachers to gauge student progress.

- Analyze answers together (with your students).

After teachers see which problems were routinely missed, class time can be spent on the concepts students need most. As the ASSISTments site says, “Homework and classwork flow seamlessly into instruction of new material.” You can use the information from the reports to decide what to cover the next day. If everyone understands a concept, you can move on instead of spending valuable class time reviewing material students already know. ASSISTments can also support small-group or personalized work.

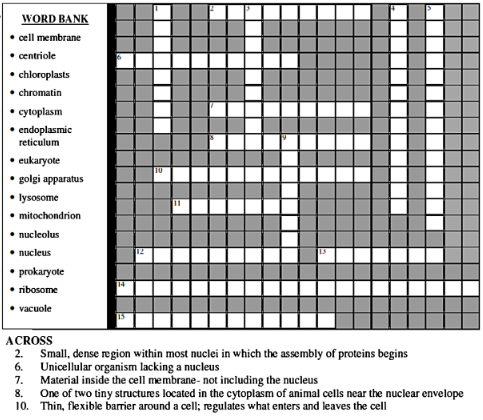

Figure. An ASSISTments message shown just before the student hits the “done” button, showing two different hints and one buggy message that can occur at different points.

Students immediately know if they’re right or wrong and can answer multiple times for partial credit, and, at the end of each assignment, each student receives an outcome report detailing their performance.

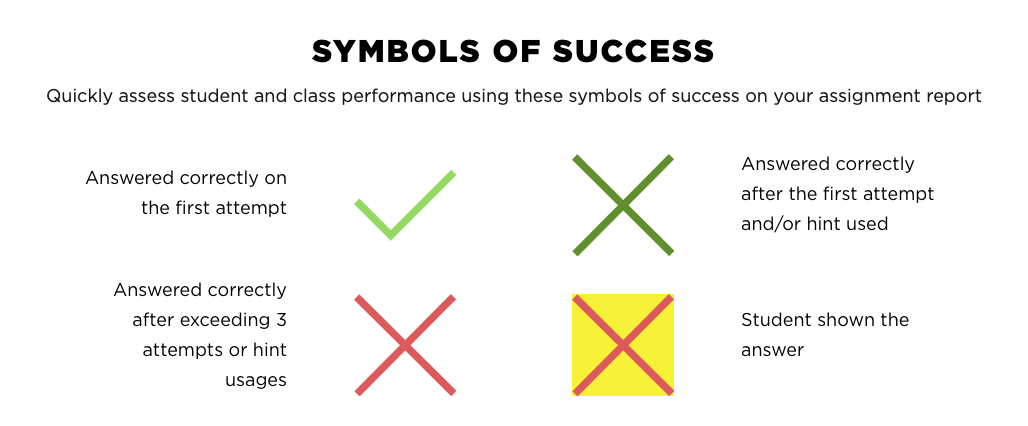

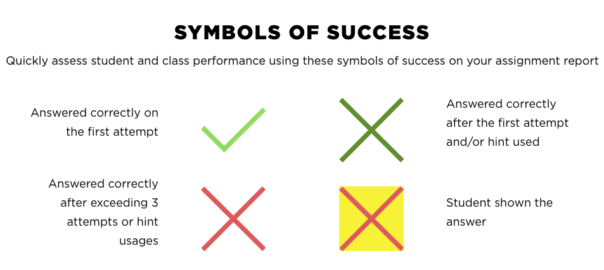

Figure. An easy way to visualize student performance.

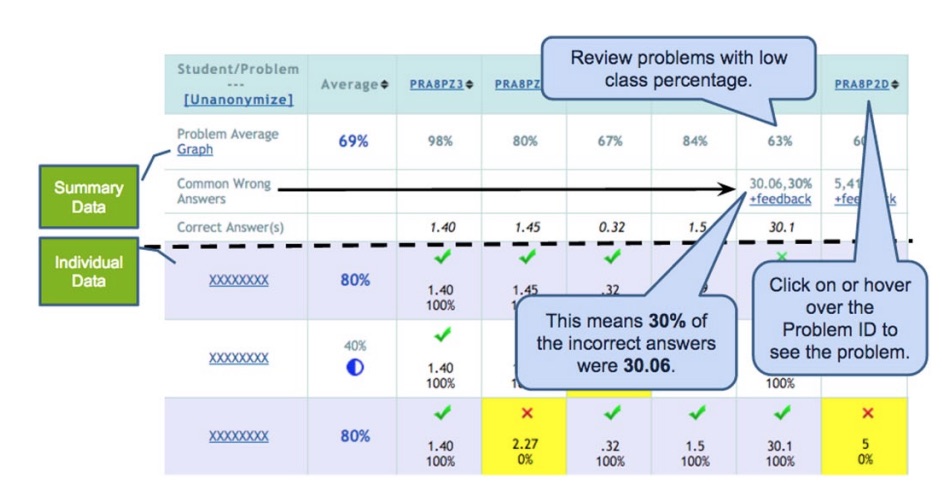

Figure. A popular ASSISTments report organizes student homework results in a grid, with tasks in columns and students in rows, so teachers can quickly identify which problems to review and what the common errors were, as indicated by the annotations.
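For readers curious about what a report like this boils down to, here is a minimal sketch of the same idea: a grid with one row per student and one column per problem, summarized into a class-wide percent correct for each problem. The student names, problem labels, results, and the 60% review threshold are all made up for illustration, and this is not ASSISTments' actual code or reporting logic.

```python
from typing import Dict, List

# Hypothetical homework grid: one row per student, one column per problem.
# True means the student answered the problem correctly. All data is made up.
results: Dict[str, Dict[str, bool]] = {
    "Student A": {"P1": True, "P2": False, "P3": True},
    "Student B": {"P1": True, "P2": False, "P3": False},
    "Student C": {"P1": True, "P2": True, "P3": False},
    "Student D": {"P1": False, "P2": False, "P3": True},
}


def percent_correct(grid: Dict[str, Dict[str, bool]]) -> Dict[str, float]:
    """Return the percentage of students who answered each problem correctly."""
    problems = next(iter(grid.values())).keys()  # column labels from the first row
    return {
        p: 100 * sum(row[p] for row in grid.values()) / len(grid)
        for p in problems
    }


def problems_to_review(
    grid: Dict[str, Dict[str, bool]], threshold: float = 60.0
) -> List[str]:
    """List problems whose class-wide percent correct is below the (hypothetical) threshold."""
    return [p for p, pct in percent_correct(grid).items() if pct < threshold]


print(percent_correct(results))     # {'P1': 75.0, 'P2': 25.0, 'P3': 50.0}
print(problems_to_review(results))  # ['P2', 'P3']
```

In this made-up class, P2 and P3 would be the problems to go over together the next day, while P1 could likely be skipped.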

This four-step process models what needs to happen in effective formative assessment, which was discussed in the second post of this series. Students engage in an assessment for learning (in this case it’s their homework), receive specific, supportive, timely, and focused feedback on how to close the gap between their current and desired understanding, and the results of the assessment are used to drive the next learning encounter.

Given its grounding in these principles of formative assessment, it’s no surprise that ASSISTments meets the rigorous What Works Clearinghouse standards without reservations and receives a strong rating as an evidence-based PK-12 program from Evidence for ESSA. In a randomized controlled trial of 2,728 seventh-grade students in Maine, the use of ASSISTments “produced a positive impact on students’ mathematics achievement at the end of a school year.” On average, that impact was equivalent to a student at the 50th percentile without the intervention improving to the 58th percentile with it. In addition, as in other formative assessment studies, the largest gains went to students with low prior achievement. (Online Mathematics Homework Increases Student Achievement) ASSISTments helps you by helping the students who need it most, and it seems to let you be in multiple places at once!
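To get a rough feel for what moving from the 50th to the 58th percentile means, one back-of-the-envelope approach is to convert both percentiles to z-scores (positions measured in standard deviations). This sketch assumes achievement scores are roughly normally distributed, which is an assumption on my part rather than something stated in the study summary above, and it is not the study's own effect-size calculation.

```python
from statistics import NormalDist

# Assumption: scores are roughly normally distributed, so a percentile rank
# maps to a z-score on the standard normal curve (mean 0, SD 1).
std_normal = NormalDist(mu=0.0, sigma=1.0)


def percentile_to_z(percentile: float) -> float:
    """Convert a percentile rank (strictly between 0 and 100) to a z-score."""
    return std_normal.inv_cdf(percentile / 100)


# A typical student without the intervention (50th percentile) compared with
# the 58th percentile reported for the same student with it.
shift_in_sd = percentile_to_z(58) - percentile_to_z(50)
print(f"Approximate shift: {shift_in_sd:.2f} standard deviations")
```

Under that assumption, the shift works out to about 0.20 standard deviations, roughly a fifth of a standard deviation. For the precise effect size, the study itself is the authority.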

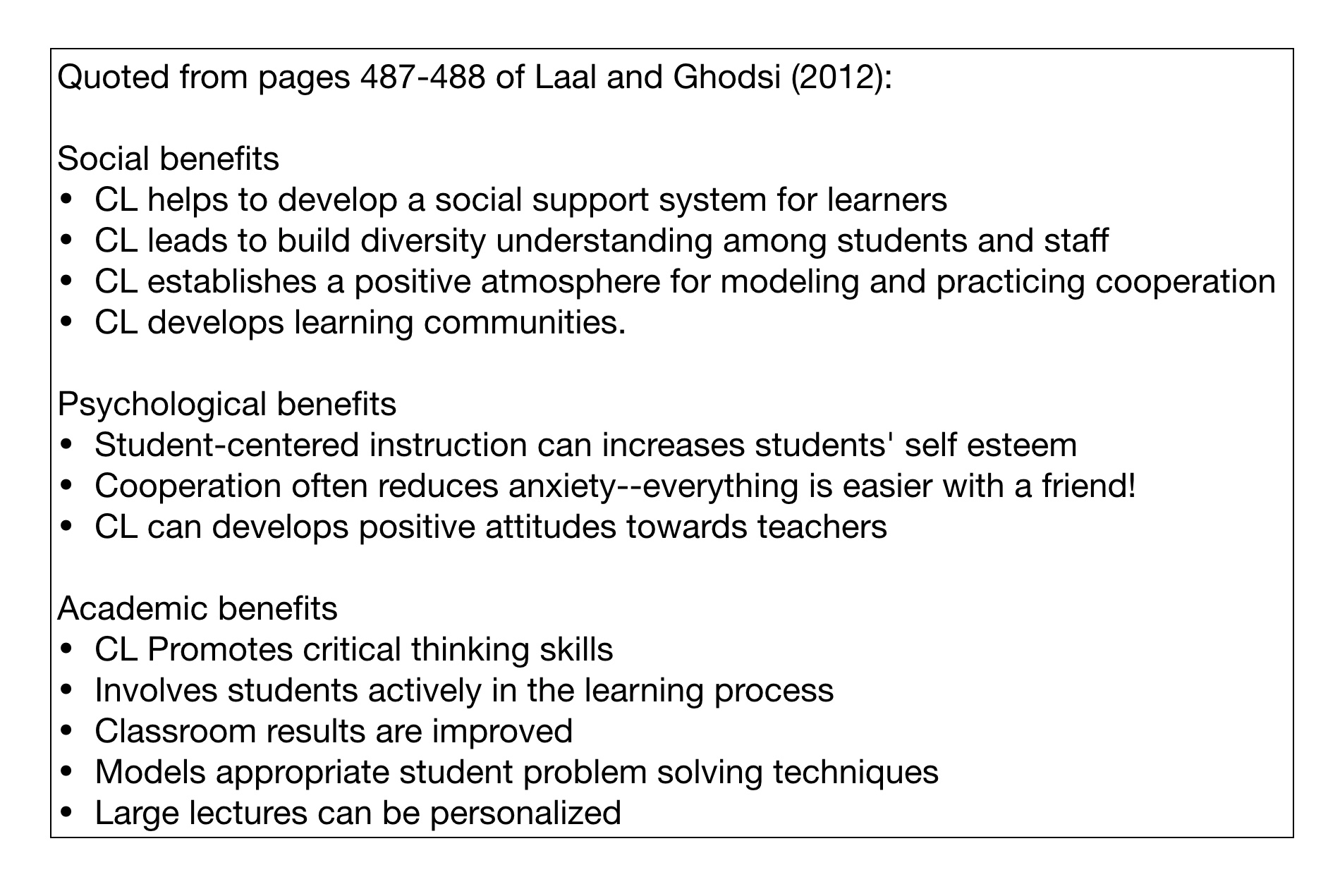

One of the reasons I’m so excited about this program is that it was thoughtfully designed with teachers and students in mind. Neil and Cristina Heffernan, the co-creators of ASSISTments, write the following in The ASSISTments Ecosystem: Building a Platform that Brings Scientists and Teachers Together for Minimally Invasive Research on Human Learning and Teaching:

“In many ways the list of problem sets in ASSISTments is a replacement for the assignment from the textbook or the traditional worksheet with questions on it. This way the teachers do not have to make a drastic change to their curriculum in order to start using the system. But more importantly they can make more sense of the data they get back since they are the ones who selected and assigned the problems. This is in contrast to the idea of an artificial intelligence automatically deciding what problem is best for each student. While this is a neat idea, it takes the teacher out of the loop and makes the computer tutorial less relevant to what is going on in the classroom.”

Exactly! I want formative assessment, in and out of the classroom, to meaningfully guide my instruction. I also appreciate that ASSISTments was designed to assist teachers in their workflow and to inform them about what students are learning and, more importantly, not learning, so that teachers can make informed decisions about how best to help their students. I hope that including teachers in the design process and helping them work more effectively with their students become standard practice for educational AI.

You need a school-verified Google Classroom or paid Canvas account to use it, but ASSISTments itself is free! Unfortunately, our school uses a basic Canvas account, but ASSISTments customer service let me have a teacher role on a personal account so I could fully explore the program. I’m hopeful that this can be a transformative homework solution for math students, and I think it will be worth your time to see what ASSISTments can offer you.

Note: I am not affiliated with ASSISTments and was not paid or asked to write about it. I learned about it from CIRCL and was intrigued because I teach mathematics, but everything I discovered about it came from my own research, and my excitement about its potential is my own.

Watch this short video to learn more about ASSISTments, and read more about co-creator Neil Heffernan in his CIRCL Perspective.

Thank you to ASSISTments co-creator Cristina Heffernan and to James Lester for reviewing this post. We appreciate your work in AI and your efforts to bring educators and researchers together on this topic.

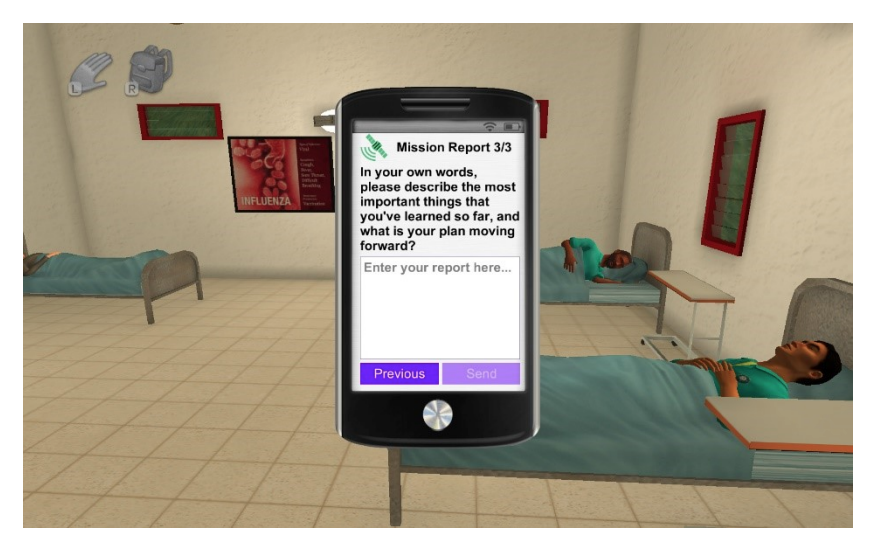

In-game reflection prompt presented to students in Crystal Island