by Sarah Hampton

One of my favorite things about our Summer of AI is learning about cyberlearning projects and how they might benefit future students. In this post, I want to showcase three projects that caught my attention because they use AI in different ways and for different ages. When we began in June, I thought AI might be mostly about robots in STEM classes or general AIs like Siri or Alexa. But now, after learning about these three example projects and many more, I realize that the future might be more about specialized AIs giving teachers information and ways to personalize learning. Sometimes the AI works behind the scenes, as in the first project I highlight. Sometimes, as in the third project, a robot is used in a Mandarin class (instead of a technology class). Let us know what you think about these projects and their potential to change how you teach and learn @CIRCLeducators!

- Project:

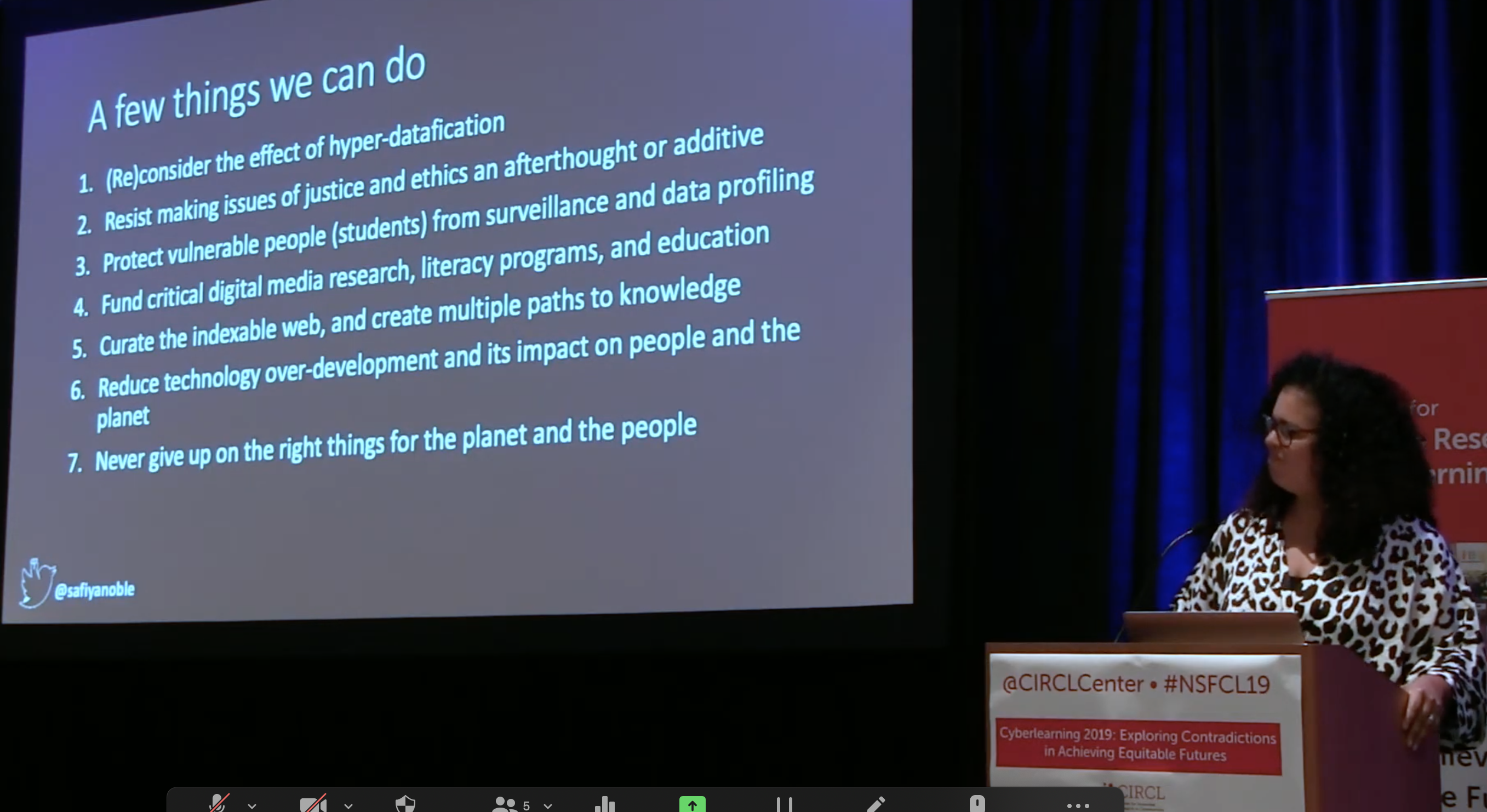

Human/AI Co-Orchestration of Dynamically-Differentiated Collaborative Classrooms

Figure 1. Left: A teacher using Lumilo while her students work with Lynette, an ITS for equation solving, in class (from Holstein et al., 2018b); Right: A point-of-view screenshot through Lumilo.

“This project will create and demonstrate new technology that supports dynamically-differentiated instruction for the classroom of the future. This new vision centers on carefully-designed partnerships between teachers, students, and artificial intelligence (AI). AI-powered learning software will support students during problem-solving practice, providing either individual guidance (using standard intelligent tutoring technology) or guidance so students can effectively collaborate and tutor each other. These learning activities are constantly adjusted to fit each student’s needs, including switching between individual or collaborative learning. The teacher “orchestrates” (instigates, oversees, and regulates) this dynamic process. New tools will enhance the teacher’s awareness of students’ classroom progress. The goal is to have highly effective and efficient learning processes for all students, and effective “orchestration support” for teachers.”

Why I’m Interested:

- Capitalizes on the strengths of students, teachers, and technology

- Creatively addresses differentiation and individualized instruction

- Promotes collaborative learning

- Relevant for all subjects

Learn More:

http://kenholstein.com/JLA_CodesignOrchestration.pdf

Teacher smart glasses (Lumilo)

- Project:

“The big question the PIs are addressing in this project is how to unobtrusively track silent reading of novice readers so as to be able to use an intelligent tutoring system to aid reading comprehension…This pilot project builds on previous work in vision and speech technology, sensor fusion, machine learning, user modeling, intelligent tutors, and eye movements in an effort to identify the feasibility of using eye tracking techniques, along with other information collected from an intelligent reading tutor, to predict reading difficulties of novice/young readers.”

“The project’s most important potential broader impacts is in establishing a foundation for exploiting gaze input to build intelligent computing systems that can be used to help children with reading difficulties learn to read and read to learn.”

Why I’m Interested:

- Targets reading comprehension, which would help students in all subjects

- Could decrease student frustration

- May identify and intercept issues early, translating to great academic gains over time

- Interacts personally with all students simultaneously in ways one teacher could not

- Allows for meaningful individual reading practice

Learn More:

Perhaps because this was a pilot program, no further information has been published. As a teacher looking toward the future and wanting to shape the conversation as it’s happening, I want to know more! I want to know what happened during this exploratory project and how similar projects could build on their work.

- Project:

Transforming World Language Education using Social Robotics

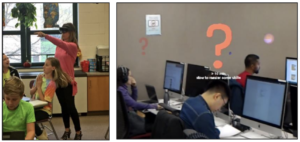

Figure 2. Students interacting with RALL-E robots.

“The social robot being developed in this project is designed to act as a language partner for students learning a foreign language, in this case those learning Chinese. It augments classroom instruction, providing for the learner a robot companion to converse with. The hypothesis is that social robots can make interactions with language speakers more exciting and more accessible, especially for less commonly taught languages. The embodied robot is designed not only to converse with learners but also to point and nod and gesture at particular people and objects, helping to direct the attention of learners and interact socially with learners in ways that a non-embodied simulation cannot.”

Why I’m Interested:

- Opens access for learning languages like Mandarin and Hindi that are spoken by hundreds of millions of people around the world but are not routinely offered in American schools

- Could easily be used in formal and informal settings

- Applies robotics beyond STEM subjects

Learn More:

https://circlcenter.org/interactive-robot-for-learning-chinese/

Thank you to James Lester for reviewing this post. We appreciate your work in AI and your work to bring educators and researchers together on this topic.

References

Holstein, K., McLaren, B. M., & Aleven, V. (2018b). Student learning benefits of a mixed-reality teacher awareness tool in AI-enhanced classrooms. In C. Penstein Rosé, R. Martínez-Maldonado, U. Hoppe, R. Luckin, M. Mavrikis, K. Porayska-Pomsta, B. McLaren, & B. du Boulay (Eds.), Proceedings of the 19th International Conference on Artificial Intelligence in Education (AIED 2018), 27–30 June 2018, London, UK (pp. 154–168). Springer, Cham. https://doi.org/10.1007/978-3-319-93843-1_12