by Cassandra Kelley, Sarina Saran, Deniz Sonmez Unal, and Erin Walker

This blog post discusses the outcomes of an Educator CIRCLS workshop that disseminated computer science education research findings to practitioners while prompting broader discussions of AI in classrooms.

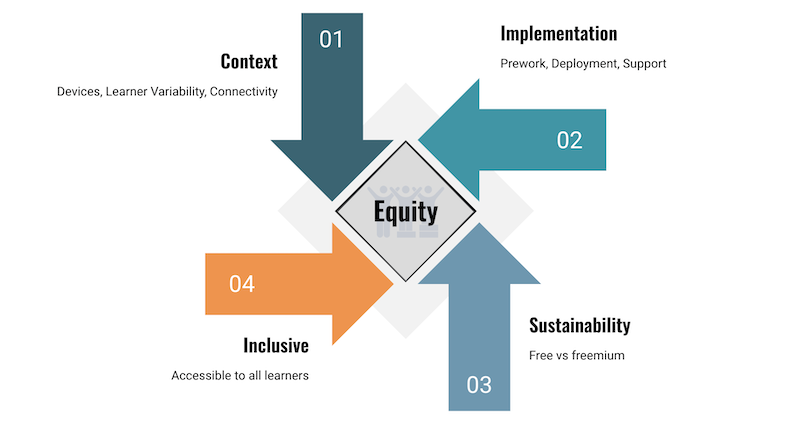

In the summer and fall of 2024, Educator CIRCLS hosted a series of webinars, workshops, and convenings between researchers and practitioners focused on artificial intelligence (AI) literacy. These events were designed to engage participants in reflective conversations about ethics, equity, and other problems or possibilities of practice concerning the integration of AI (especially genAI) in PreK-12 education.

As part of this series, our team from the University of Pittsburgh piloted a novel strategy for research dissemination, in which we developed supplemental curricular resources or guided activities and shared them with educators in a workshop format. The goals behind these activities were twofold:

- To facilitate discussion among educators about current research on the integration of emerging technologies that incorporate AI (e.g., robots and intelligent tutoring systems) and how they might impact the future of learning in education settings, and

- To provide a mechanism for educators to think critically about ways to introduce elements of AI literacy to students via real-world exercises that simulate the work that researchers are doing (see Translating Research on Emerging Technologies for Educators for further background on the design of this workshop).

During the planning stage of the workshop, we felt it was pertinent to get a better understanding of PreK-12 teachers’ experiences with professional learning for computer science (CS) education. We wanted to speak directly with them about the impact of these experiences on their practice and seek their recommendations for how these professional development programs should be designed.

We interviewed 20 educators from 16 states, who taught across different grade levels and/or content areas. Most interviewees felt a disconnect with research dissemination as a form of professional learning and expressed their desire to better understand how emerging technologies connect with research-based practices and learning theories. They discussed how previous workshops they had attended focused either directly on the technology tools or on a mandated “turnkey curriculum” based on rote memorization and knowledge transfer (e.g., Advanced Placement CS course materials). Teachers expressed how they appreciated receiving curricular resources because such resources help them to stay current in this ever-evolving field. They would like to see fewer “direct instruction” lessons and more real-world approaches with project-based or problem-based learning (PBL) that promote inquiry, similar to what is expected in industry. They also emphasized the need for further collaborative opportunities to ideate on promoting digital/AI literacy through their instruction.

Following our conversations with teachers, we intentionally designed a workshop with guided activities, based on research projects on emerging technologies, that could expose practitioners to existing literature and findings while potentially seeding new ideas for curricula. Our workshop design incorporated the following structure: (1) outline the theoretical framework and CS concepts, (2) have participants experience different roles (e.g., student, educator, and researcher) within inquiry-based activities, (3) share project research findings, (4) discuss implications for practice and ways to address AI literacy, and (5) reflect on the overall format of the workshop and consider how to improve the design of future workshops.

We featured two research projects:

- Project 1: The design of intelligent robots with social behaviors and their potential roles in learning settings

- Project 2: Utilizing neuroadaptive learning technologies to assess a learner’s cognitive state with brain imaging

Our first session on teachable robots presented a research project that examined middle school students’ interactions with Nao robots in mathematics instruction. Participants were asked to think about the design and implementation challenges in building a robotic dialogue system for learning from the perspectives of a student, an educator, and a researcher. They explored CS concepts related to Natural Language Processing (NLP) by: (1) determining keywords used in solving a math problem, (2) reviewing sample dialogue scripts and the Artificial Intelligence Markup Language (AIML) that researchers used to program the Nao robot, and (3) interacting with prototype simulations created in Pandorabots that represented social and nonsocial versions of a chatbot. We also shared further extensions that could potentially be remixed or adapted for use with students, such as revising the dialogue by adding more social elements, writing a new script for solving a different math problem in AIML, developing a chatbot to test the code, or experimenting with a program such as Scratch to create a dialogue between two sprites.
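
For readers curious about what the keyword activity might look like in code, here is a minimal Python sketch of keyword-based dialogue matching, loosely in the spirit of AIML pattern/template pairs. It is not the script the researchers used with the Nao robot; the rules, replies, and the `social` flag are all hypothetical and only meant to illustrate the idea of a social versus nonsocial response.

```python
import re

# Hypothetical keyword-to-reply rules (illustrative only, not the project's scripts).
RULES = [
    ({"ratio", "ratios"}, "Which two quantities are we comparing?"),
    ({"multiply", "times"}, "What should I multiply, and by how much?"),
    ({"divide", "divided"}, "What number are we dividing by?"),
]
FALLBACK = "I'm not sure I follow. Can you explain that step another way?"
SOCIAL_PREFIX = "Thanks for explaining! "  # assumed example of a "social" element


def tokenize(utterance: str) -> set[str]:
    """Lowercase the utterance and return its set of words."""
    return set(re.findall(r"[a-z']+", utterance.lower()))


def respond(utterance: str, social: bool = False) -> str:
    """Return the reply for the first rule whose keywords appear in the utterance."""
    words = tokenize(utterance)
    reply = FALLBACK
    for keywords, candidate in RULES:
        if words & keywords:  # at least one keyword is present
            reply = candidate
            break
    return SOCIAL_PREFIX + reply if social else reply
```

Calling `respond("We need to find the ratio first")` returns the ratio question, while passing `social=True` adds the friendly prefix. Students could extend the rule list for a different math problem, much like the AIML extension described above.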

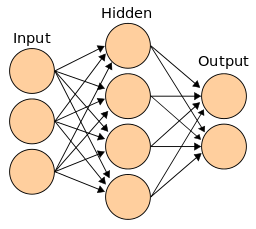

Our second session on neuroimaging and educational data mining presented a research project that examined how students process information while interacting with intelligent tutoring systems. A major component of this study focused on the analysis of data collected by these systems to uncover patterns or trends that can inform and potentially improve teaching and learning practices. Additionally, neuroimaging brain data was collected as a proof of concept to explore how it might be analyzed to better understand how cognition, attention, and emotion affect learning (for further background on how this equipment works, see Neuroscience in Education). Similar to the first session, we presented guided activities to help participants think about the design of intelligent tutoring systems and the types of data collected. Participants created their own data visualizations from sample datasets using the free educational software Common Online Data Analysis Platform (CODAP), and they categorized example brain activation images based on the corresponding levels of task difficulty. Further extension activities were shared, such as outlining specific actions that an intelligent tutoring system might take to provide feedback (e.g., hints, prompting questions, or praise) in response to student behavior and debunking “neuro-myths” in education.
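
The extension activity on tutoring-system feedback can also be sketched in a few lines of Python. The example below is a simple rule-based illustration under our own assumptions, not how the tutoring system in the study works: the `StepAttempt` fields and the thresholds (two prior errors, 30 seconds idle) are hypothetical values chosen only to make the rules concrete.

```python
from dataclasses import dataclass


@dataclass
class StepAttempt:
    """A hypothetical snapshot of a student's work on one problem step."""
    correct: bool         # was the latest answer correct?
    prior_errors: int     # wrong attempts on this step so far
    seconds_idle: float   # time since the student's last action


def choose_feedback(attempt: StepAttempt) -> str:
    """Pick a feedback action using simple, assumed rules."""
    if attempt.correct:
        return "praise"
    if attempt.prior_errors >= 2:   # repeated errors: offer a hint
        return "hint"
    if attempt.seconds_idle > 30:   # long pause: ask a prompting question
        return "prompting question"
    return "none"                   # otherwise let the student keep working
```

For example, `choose_feedback(StepAttempt(correct=False, prior_errors=2, seconds_idle=5))` selects a hint. Students could debate whether these rules are fair or transparent, which ties back to the AI literacy discussion.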

At the conclusion of each workshop, we asked educators their thoughts about the potential benefits and challenges of integrating these emerging technologies in PreK-12 classrooms and what they would like future research to explore. Our goal was to hear practitioner voices and gather input for researchers and developers to consider. This led to a focused discussion on the need to promote AI literacy in education, especially to address ethics and transparency.

Key takeaways from the experience are:

- Teachers appreciate the opportunity to learn more about innovative research projects, but they especially like the idea of being in dialogue with researchers and potentially playing a role in the work that’s being done. Many volunteered to pilot future projects exploring the implementation of curricula and/or emerging technologies with their students if invited.

- Teachers expressed that the content in our guided activities, while rigorous, enabled them to be more reflective. They were engaged with the hands-on simulations of the research and discussed how “active learning helped to promote deeper thinking.” As one participant mentioned, the activities allowed her to “think outside of the normal pedagogy box.”

- Teachers had mixed feelings on the relevance of the workshop content and how to bring it into their schools or classrooms. Some thought it would be challenging to implement the activities with students due to external factors and other curricular mandates. As one participant stated, “one tension with cutting-edge research is that it’s difficult to be practical in the moment. I think you’re on the right track with scaling down the technology or bringing the insights to the classroom level…this [workshop] is way more effective than most formats, but I think you would have a difficult time getting educators to opt in.” Meanwhile, another participant said, “in both workshops, the concepts and practice of the teachable bot and neuroimaging was beyond the ‘here and now’ of teaching and learning, but the examination of how our current concepts of pedagogy may change as we catch up to the technology.” Additionally, several teachers discussed how the workshop offered new ways for them to think about bringing in real-world data and student-led projects to promote further inquiry and AI literacy.

- Teachers valued the opportunity to collaborate with other educators and researchers. They liked exploring different lenses (e.g., student, teacher, and researcher) while engaging in reflective discussions about the impact of research on their practice. One teacher highlighted how it felt like a “safe space to troubleshoot uses of AI and educational data mining” and another expressed appreciation for “garnering others’ experiences to get further ideas for their own classroom.”

Based on overall positive feedback from our teacher participants, we believe this research dissemination workshop model is worth exploring with other projects, especially since educators felt they were able to take something meaningful away from the experience. As one participant stated, “I feel very fortunate to be involved in this work. I’m very happy that your team is working to push the boundaries of how we learn and teach.” This gives us hope that researchers will consider the importance of collaborating and co-designing with educators. Additionally, this work validates the need for further mediation between research and practice, which could include creating new roles for “knowledge brokers” (Levin, 2013) to promote further dialogue across these boundaries in order to truly make a broader impact.

Thank you to Sarah Hampton and Dr. Judi Fusco for their thinking and feedback on this post.

References:

Levin, B. (2013, February). To know is not enough: Research knowledge and its use. Review of Education, 1(1), 2-31. DOI: 10.1002/rev3.3001

About the Authors

Cassandra Kelley, Ed.D. has over fifteen years of experience in K-12 and teacher education. She earned her doctoral degree in Learning Technologies from Pepperdine University and is passionate about exploring new tools that can improve teaching and learning. She currently serves as a Broader Impacts Project Coordinator at the University of Pittsburgh and supports CIRCLS with Expertise Exchanges in the AI CIRCLS and Educator CIRCLS subcommunities. Cassandra also teaches graduate courses for National University in the Master of Science in Designing Instructional and Educational Technology (MSDIET) Program.

Sarina Saran is a third-year undergraduate student at the University of Pittsburgh pursuing a B.S. in Computer Science and a B.A. in Media and Professional Communications. She is curious about the intersection of technology and communication, and she has been able to develop a greater understanding of the challenges in this area as an Undergraduate Research Assistant in the Office of Broader Impacts.

Deniz Sonmez Unal is a Ph.D. candidate in Intelligent Systems at the University of Pittsburgh. Her research focuses on modeling student cognitive states using multimodal data, including interaction logs, verbal protocol data, and neural signals to enhance the diagnostic capabilities of intelligent tutoring systems.

Erin Walker, Ph.D. is a co-PI of CIRCLS and a tenured Associate Professor at the University of Pittsburgh, with joint appointments in Computer Science and the Learning Research and Development Center. She uses interdisciplinary methods to improve the design and implementation of educational technology, and then to understand when and why it is effective. Her current focus is to examine how artificial intelligence techniques can be applied to support social human-human and human-agent learning interactions.