by Sarah Hampton

In my last post, I talked about effective formative assessments and their powerful impact on student learning. In this post, let’s explore why AI is well-suited for formative assessment.

- AI can offer individualized feedback on specific content.

- AI can offer individualized feedback that helps students learn how to learn.

- AI can provide meaningful formative assessment outside of school.

- AI might be able to assess complex and messy knowledge domains.

Individualized Feedback on Content Learning

I think individualized feedback is the most powerful advantage of AI for assessment. As a teacher, I can only be in one place at a time looking in one direction at a time. That means I have two choices for feedback: I can take some time to assess how each student is doing and then address general learning barriers as a class, or I can assess and give feedback to students one at a time. In contrast, AI allows for simultaneous individualized feedback for each student.

“AI applications can identify pedagogical materials and approaches adapted to the level of individual students, and make predictions, recommendations and decisions about the next steps of the learning process based on data from individual students. AI systems assist learners to master the subject at their own pace and provide teachers with suggestions on how to help them.” (Trustworthy artificial intelligence (AI) in education: promises and challenges)

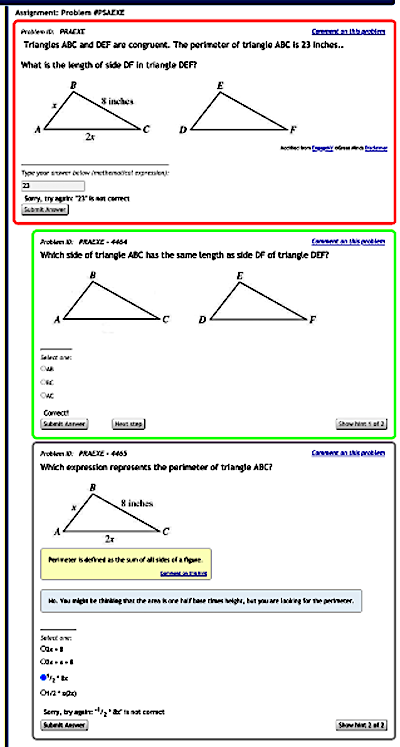

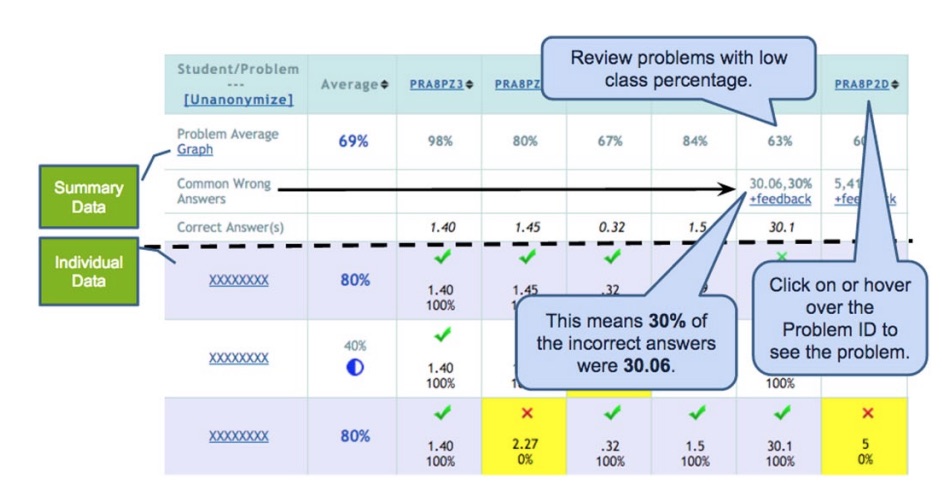

Going one step further, AI can assess students without disrupting their learning through something called stealth assessment. While students work, AI can quietly collect data in the background, such as the time it takes to answer questions and which incorrect strategies they tried before succeeding, and organize that data into a dashboard so teachers can decide what to focus on or clear up the next day in class. Note: As a teacher, I want the AI to help me do what I do best. I definitely want to see what each student needs in their learning. I also want to control when the AI should alert me to intervene (as a caring human) instead of it trying to do something on its own that it isn’t capable of doing well.
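For readers who like to see the idea in code, here is a minimal sketch in Python of what that background data collection and teacher dashboard might look like. It is only an illustration, not how any real product works: the `Attempt` record, the `summarize` function, the strategy labels, and both thresholds are names and numbers I made up. The one design choice I care about is that the teacher sets the thresholds and the code only raises a flag; it never intervenes on its own.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Attempt:
    """One logged try at a question (hypothetical record format)."""
    student: str
    question: str
    seconds: float   # time spent on this attempt
    strategy: str    # label for the approach the student tried
    correct: bool


def summarize(attempts, max_avg_seconds=90.0, max_wrong_tries=3):
    """Group logged attempts by student and flag who may need a check-in.

    Both thresholds are assumptions chosen by the teacher.
    The function only flags students; it never acts on its own.
    """
    by_student = defaultdict(list)
    for attempt in attempts:
        by_student[attempt.student].append(attempt)

    dashboard = {}
    for student, rows in by_student.items():
        wrong = [r for r in rows if not r.correct]
        avg_seconds = mean(r.seconds for r in rows)
        dashboard[student] = {
            "avg_seconds": round(avg_seconds, 1),
            # Which incorrect strategies were tried, and how often
            "wrong_strategies": Counter(r.strategy for r in wrong),
            "alert_teacher": (
                avg_seconds > max_avg_seconds or len(wrong) >= max_wrong_tries
            ),
        }
    return dashboard


# Made-up log for two students working on the same question
log = [
    Attempt("Ana", "q1", 40.0, "guess-and-check", False),
    Attempt("Ana", "q1", 35.0, "set up equation", True),
    Attempt("Ben", "q1", 120.0, "guess-and-check", False),
    Attempt("Ben", "q1", 100.0, "guess-and-check", False),
    Attempt("Ben", "q1", 95.0, "added instead of multiplied", False),
]

for student, row in summarize(log).items():
    print(student, row)
```

With this made-up log, Ben is flagged for a check-in and Ana is not, and the dashboard shows that Ben tried guess-and-check twice before switching strategies.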

Feedback That Helps Students Learn How to Learn

“Two experimental research studies have shown that students who understand the learning objectives and assessment criteria and have opportunities to reflect on their work show greater improvement than those who do not (Fontana & Fernandes, 1994; Frederikson & White, 1997).” (The Concept of Formative Assessment)

In the last post, I noted that including students in the process of self-assessment is critical to effective formative assessment. After all, we ultimately want students to be able to regulate their own learning. But, as one teacher, I sometimes find it difficult to remind each student individually to stop, reflect on their work, and brainstorm ways to close the gap between their current understanding and their learning goal. By contrast, regulation prompts can be built into AI software so that students routinely stop to check their understanding and defend their reasoning, giving them a start on learning how to self-regulate.

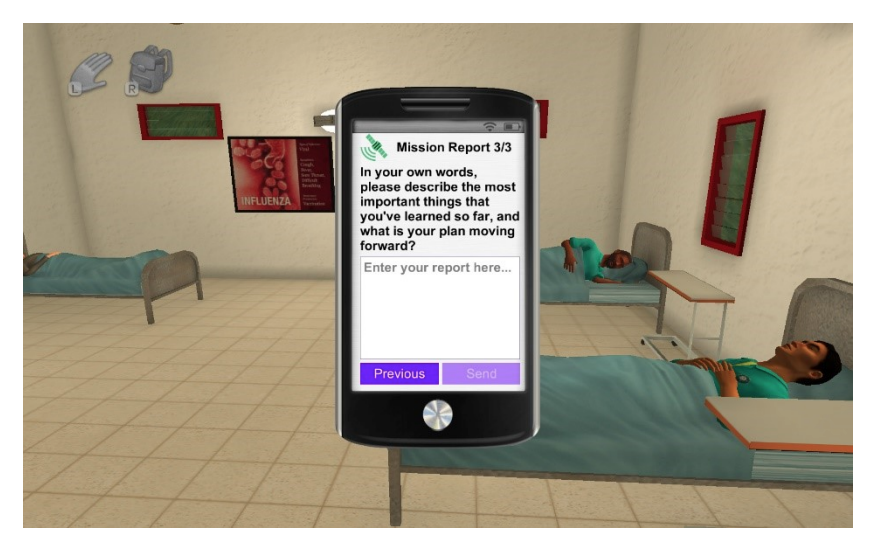

For example, this is done in Crystal Island, an AI game-based platform for learning middle school microbiology, where “students were periodically prompted to reflect on what they had learned thus far and what they planned to do moving forward…Students received several prompts for reflection during the game. After completing the game or running out of time, students were asked to reflect on their problem-solving experience as a whole, explaining how they approached the problem and whether they would do anything differently if they were asked to solve a similar problem in the future.” (Automated Analysis of Middle School Students’ Written Reflections During Game-Based Learning)

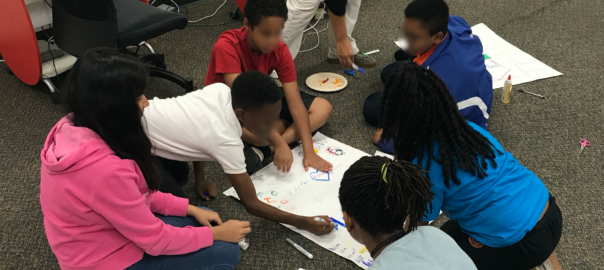

In-game reflection prompt presented to students in Crystal Island

Meaningful Formative Assessment Outside of School

Formative assessment and feedback can come from many sources, but, traditionally, the main source is the teacher. Students typically have access to their teacher only inside the classroom and during class time. In contrast, AI software can provide meaningful formative assessment anytime and anywhere, which means learning can occur anytime and anywhere, too.

In the next post, we’ll look at how one AI tool, ASSISTments, is using formative assessment to transform math homework by giving meaningful individualized feedback at home.

Assessing Complexity and Messiness

In the first post of the series, I discussed the need for assessments that can measure the beautiful complexity of what my students know. I particularly like the way Griffin, McGaw, and Care state it in Assessment and Teaching of 21st Century Skills:

“Traditional assessment methods typically fail to measure the high-level skills, knowledge, attitudes, and characteristics of self-directed and collaborative learning that are increasingly important for our global economy and fast-changing world. These skills are difficult to characterize and measure but critically important, more than ever. Traditional assessments are typically delivered via paper and pencil and are designed to be administered quickly and scored easily. In this way, they are tuned around what is easy to measure, rather than what is important to measure.”

We have to have assessments that can measure what is important and not just what is easy. AI has the potential to help with that.

For example, I can learn more about how much my students truly understand about a topic from reading a written response than from a multiple-choice response. However, it’s not possible to assess students this way frequently because of the time it takes to read and give feedback on each essay. (Consider some secondary teachers who see 150+ students a day!)

Fortunately, one major area for AI advancement has been in natural language processing. AIs designed to evaluate written and verbal ideas are quickly becoming more sophisticated and useful for providing helpful feedback to students. That means that my students could soon have access to a more thorough way to show what they know on a regular basis and receive more targeted feedback to better their understanding.

While the purpose of this post is to communicate the possible benefits of AI in education, it’s important to note that my excitement about these possibilities is not a carte blanche endorsement of them. Like all tools, AI has the potential to be used in beneficial or nefarious ways. There is a lot to consider as we think about AI, and we’re just starting the conversation.

As AI advances and widespread classroom implementation becomes increasingly possible, it’s time to seriously listen to those at the intersection of the learning sciences and artificial intelligence, like Rose Luckin. “Socially, we need to engage teachers, learners, parents and other education stakeholders to work with scientists and policymakers to develop the ethical framework within which AI assessment can thrive and bring benefit.” (Towards artificial intelligence-based assessment systems)

Thank you to James Lester for reviewing this post. We appreciate your work in AI and your work to bring educators and researchers together on this topic.

We are still at the beginning of our conversation around AI in Education. What do you think? Do the possible benefits excite you? Do the possible risks concern you? Both? Let us know @EducatorCIRCLS.
