By Pati Ruiz, Sarah Hampton, Judi Fusco, Amar Abbott, and Angie Kalthoff

In October 2019 the CIRCL Educators gathered in Alexandria, Virginia for Cyberlearning 2019: Exploring Contradictions in Achieving Equitable Futures (CL19). For many of us on the CIRCL Educators’ team, it was the first opportunity to meet in person after working collaboratively online for years. In addition, CL19 gave us opportunities to explore learning in the context of working with technology and to meet with researchers with diverse expertise and perspectives. We examined the tensions that arise as research teams expand the boundaries of learning, and considered how cyberlearning research might be applied in practice.

One of the topics we thought a lot about at CL19 is algorithms. We had the opportunity to hear from keynote speaker Safiya Noble, an Associate Professor at UCLA and author of a best-selling book on racist and sexist algorithmic bias in commercial search engines, Algorithms of Oppression: How Search Engines Reinforce Racism (NYU Press). In her keynote, The Problems and Perils of Harnessing Big Data for Equity & Justice, Dr. Noble described the disturbing findings she uncovered when she started investigating algorithms related to search. She was not satisfied with the answer that the way algorithms categorized people, particularly girls of color, was what “the public” wanted. She dug deeper, and what she said really made us think.

This keynote is related to some of the conversations we’re having about Artificial Intelligence (AI), so we decided to re-watch the recorded version and discuss the implications of harnessing Big Data for students, teachers, schools, and districts. Big Data is crucial to much of the work related to AI, and so are algorithms. We bring this into our series on AI because, even though math and numbers may seem free of cultural bias, they can be biased and can be used to promote discrimination. In this post, we don’t summarize the keynote, but we tell you what really got us thinking. We encourage you to watch it too.

Besides discussing algorithms for search, Dr. Noble also discussed the implications of technology, data, and algorithms in the classroom. For example, she shared that she walks her students through how a Learning Management System works, so that they know the technology they are using can tell their professors how often and for how long they log into the system (among other things). She said students were often surprised that their teachers could learn these things. She went on to say:

“These are the kinds of things that are not transparent, even to the students that many of us are working with and care about so deeply.”

Another idea from the talk that particularly resonated with us as teachers is the social value of forgetting. Sometimes there is value in digitally preserving data, but sometimes there is more value in NOT documenting it.

“These are the kinds of things when we think about, what does it mean to just collect everything? Jean-François Blanchette writes about the social value of forgetting. There’s a reason why we forget, and it’s why juvenile records, for example, are sealed and don’t follow you into your future so you can have a chance at a future. What happens when we collect, when we use these new models that we’re developing, especially in educational contexts? I shudder to think that my 18-year-old self and the nonsense papers (quite frankly who’s writing a good paper when they’re 18) would follow me into my career? The private relationship of feedback and engagement that I’m trying to have with the faculty that taught me over the course of my career or have taught you over the course of your career, the experimentation with ideas that you can only do in that type of exchange between you and your instructor, the person you’re learning from, that being digitized and put into a system, a system that in turn could be commercialized and sold at some point, and then being data mineable. These are the kinds of real projects that are happening right now.”

We are now thinking a lot about how to help students and teachers better understand how our digital technology tools work, and about how we should weigh using technology to help learners against the potential problem of hyper-datafication, where everything is saved and a learner can never move past some of their history.

As we think through this tension, and other topics in the keynote, some of the questions that came up for us include:

- What information is being collected from our students and their families/homes and why? Where does the information go?

- Who is creating the app that is collecting the data? Are they connected to other programs/companies that can benefit from the data?

- What guidelines for privacy does the software company follow? FERPA/COPPA? Do there need to be more or updated standards? What policies aren’t yet in place that we need to protect students?

- What kinds of data are being digitally documented that could still be available years after a student has graduated? How could that impact them in job searches? Or, what happens when our students, who have documented their whole lives digitally, want to run for public office?

- There are well-documented protocols for destroying students’ physical work, so what documented protocols are in place for their digital work?

- Are school devices (e.g., Chromebooks or iPads) that contain sensitive student data being shared? Are all devices wiped between school years?

- Students clean out their desks and lockers at the end of the school year; should we be teaching them to clean out their devices?

- Do students have an alternative to using software or devices if they or their families have privacy concerns? Should they?

- Is someone in your district (or school) accountable for privacy evaluation, software selection, and responsible use?

- How are teachers being taught what to look for and evaluate in software?

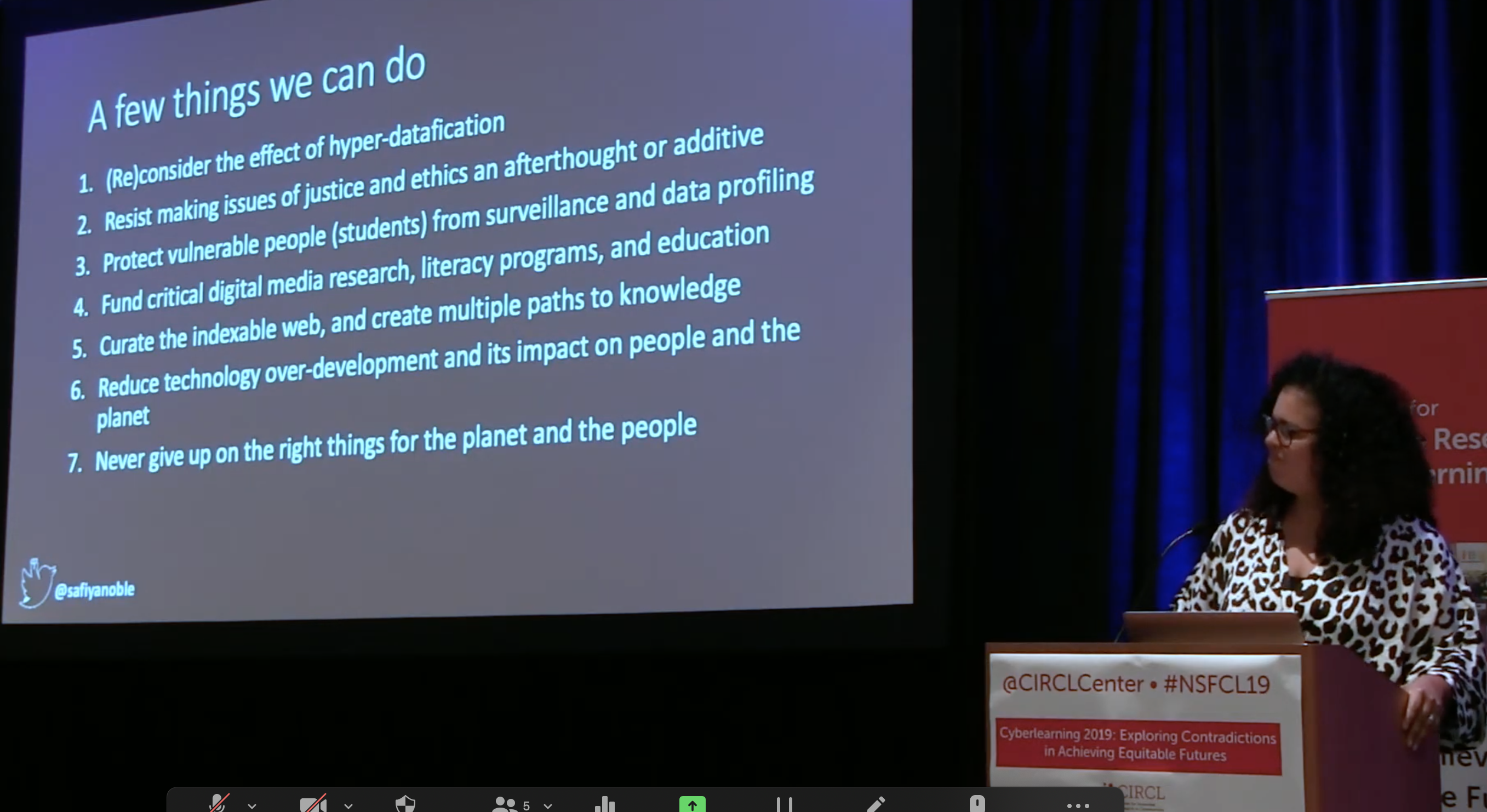

In future posts, we’ll cover more of what Dr. Noble suggested based on her work, including the following points she made:

- (Re)consider the effect of hyper-datafication

- Resist making issues of justice and ethics an afterthought or additive

- Protect vulnerable people (students) from surveillance and data profiling

- Fund critical digital media research, literacy programs, and education

- Curate the indexable web, create multiple paths to knowledge

- Reduce technology over-development and its impact on people and the planet

- Never give up on the right things for the planet and the people

Finally, some of us have already picked up a copy of Algorithms of Oppression: How Search Engines Reinforce Racism. If you read it, we would love to hear your thoughts about it. Tweet @CIRCLEducators. Also, let us know if you have questions or thoughts about the keynote and/or algorithms.